Precision Isn’t Optional

Reliability and performance define success in aerospace, robotics, and advanced manufacturing. In these domains, failure isn’t an inconvenience—it’s a mission-ending event. Traditional monitoring systems fall short, leaving engineering teams reactive rather than proactive. To stay ahead of failure, you need more than monitoring. You need unified hardware observability.

Hardware observability isn’t a buzzword—it’s a strategic imperative. It unlocks visibility into the deepest layers of complex machines, surfacing anomalies before they cascade into critical failures. This approach shifts engineering teams from firefighting to preventing problems before they start.

This article breaks down the fundamentals of hardware observability: what it is, how it works, and why it’s essential for teams managing mission-critical systems. Whether you're tuning an autonomous vehicle or maintaining hypersonic flight performance, observability is the foundation for operational dominance.

Monitoring is Obsolete

Traditional monitoring systems rely on predefined metrics and alerts. They tell you when something goes wrong—but not why. That limitation is acceptable in simple environments. In complex, high-performance hardware, it’s a liability.

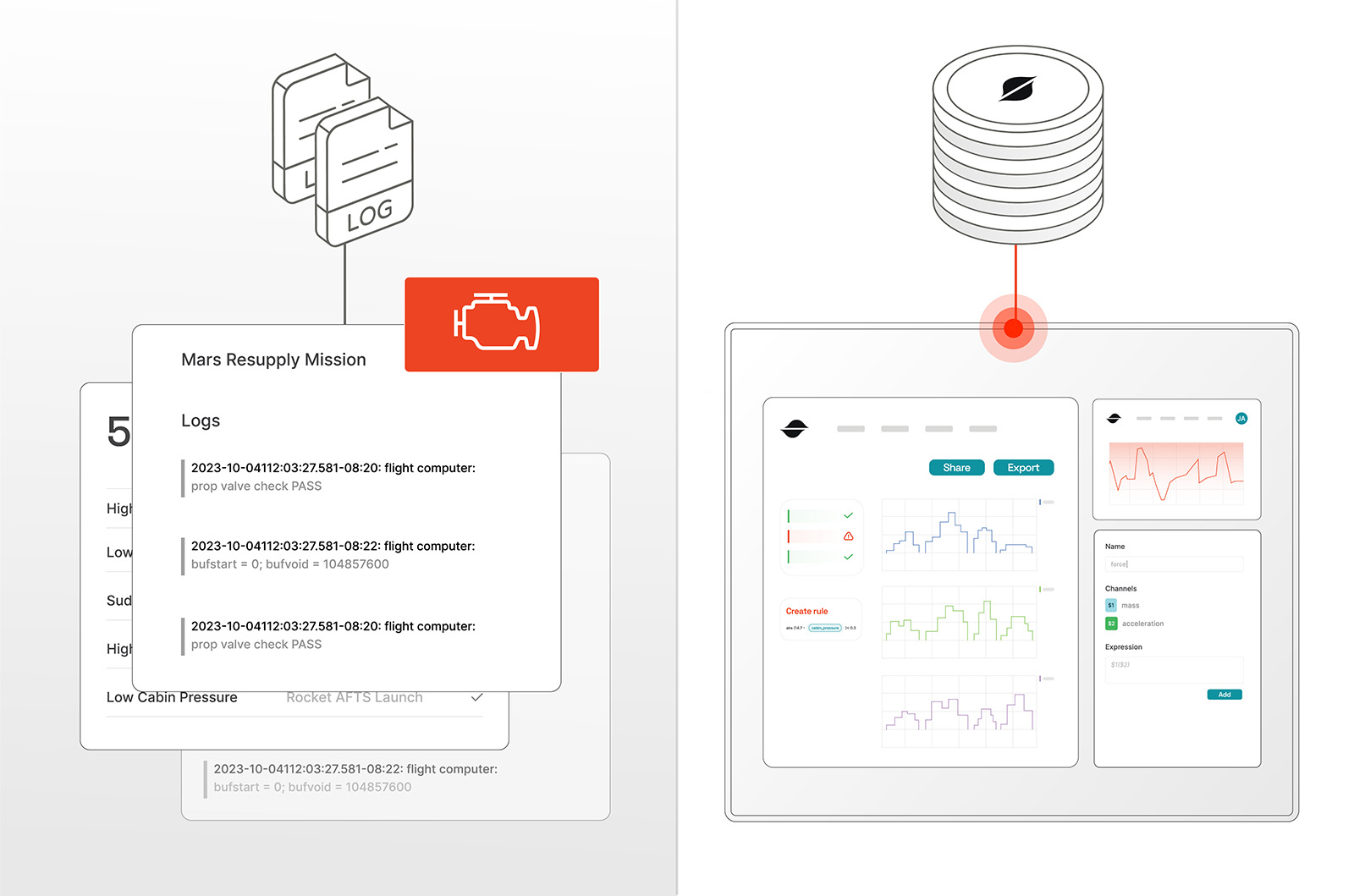

- Monitoring is like a check engine light—it signals an issue but offers no diagnostic insight. Engineers must dig through logs and dashboards, hoping to pinpoint the problem.

- Observability is dynamic. It aggregates system-wide data—telemetry, logs, and traces—giving engineers real-time, root-cause analysis. Instead of guessing, teams know exactly what happened and how to fix it.

For modern hardware development observability isn’t optional—it’s the difference between reacting to problems and preventing them from ever occurring.

Observability isn’t optional—it’s the difference between reacting to problems and preventing them from ever occurring.

Cracking Open the Black Box

Complex systems generate vast amounts of data. Without structured observability, that data is noise. The key is turning raw machine telemetry into actionable intelligence.

The Three Pillars of Observability:

- Telemetry Streams – The continuous flow of high-frequency data from sensors, actuators, and onboard systems. This includes machine data, capturing real-time system behavior and performance over time.

- Event Logs – Discrete events that provide context to telemetry streams, offering insight into state changes, anomalies, and system interactions. These logs serve as a reference point for understanding what happened and when.

- Institutional Knowledge– Codified system behaviors and relationships between subsystems. Stateful rules capture engineering expertise, ensuring continuity across teams, automating anomaly detection, and maintaining a structured record of expected system interactions over time.

These pillars form the foundation of hardware observability. Without them, engineering teams are left flying blind.

Unlocking the Potential of Modern Machines

Sift was built to solve the complexity problem of modern machines. From aerospace to autonomous systems, today’s hardware generates massive volumes of data that traditional tools can’t manage. Without real-time insight, engineering teams are forced into reactive firefighting, unable to harness the full potential of their machines.

Observability changes that. By turning raw data into structured intelligence, it enables:

- Proactive Anomaly Detection – Defining nominal behavior and flagging deviations before they escalate into mission-critical failures.

- Faster Root Cause Analysis – Eliminating guesswork, pinpointing faults in real time, and reducing downtime across engineering workflows.

- Fault-tolerant Collaboration – Ensuring that engineering, operations, and leadership work from a shared source of truth.

- Automated Reporting & Certification – Streamlining compliance and validation with automatically generated, audit-ready reports.

This is why we built Sift—to replace fragmented, legacy tooling with a single, purpose-built observability platform. By integrating data ingestion, storage, real-time visualization, and intelligent automation, we enable teams to move beyond monitoring and take control of their systems with confidence.

This is why we built Sift—to replace fragmented, legacy tooling with a single, purpose-built observability platform.

The Complexity Crisis: Why Observability is Essential

Modern machines are more advanced than ever, but the tools used to build, test, and operate them haven’t kept pace. Despite rapid advancements in software, hardware processes remain surprisingly manual and fragmented, leading to inefficiencies, higher costs, and slower development cycles. This complexity manifests in three key areas:

- Complexity of Individual Machines – Modern hardware, from rockets and autonomous vehicles to satellites and robotics, consists of millions of interconnected components, each generating vast amounts of telemetry. These systems must meet strict safety and reliability standards, yet traditional diagnostic tools are often inadequate, leading to blind spots in performance and failure analysis.

- Complexity of Organizations – Developing, manufacturing, and operating these machines requires collaboration across thousands of engineers, technicians, and operators, each with different levels of expertise. Unlike software teams, which leverage structured workflows for version control and collaboration, hardware teams lack a unified framework to efficiently share, review, and act on data at scale.

- Complexity of Fleets – The rise of high-volume, distributed machine networks—such as satellite constellations, autonomous drones, and smart industrial systems—creates an even greater challenge: managing fleets of assets in real time. Without scalable observability, organizations struggle to monitor performance, detect anomalies, and coordinate maintenance across hundreds or thousands of machines simultaneously.

Observability is the key to overcoming these challenges. It provides a unified, structured approach to managing data across individual machines, teams, and fleets—ensuring that organizations can transition from reactive troubleshooting to proactive, insight-driven decision-making. However, implementing observability at scale is not without its obstacles.

The Challenges of Scaling Observability

As machine complexity increases, so do the challenges of achieving meaningful observability. Without the right strategy, teams will struggle with:

- Fragmented and Siloed Data – Engineering teams operate across multiple disconnected tools, making it nearly impossible to correlate data across design, testing, and operations. Sift unifies observability across the entire machine lifecycle, eliminating data silos.

- High Data Volume and Complexity – Modern machines generate terabytes of telemetry per day. Without scalable storage and retrieval, critical insights get lost in the noise. Sift is purpose-built to manage high-cardinality telemetry at scale.

- Skill and Knowledge Gaps – In-house observability solutions require specialized expertise to maintain. 77% of organizations struggle to scale internal tools, leading to inefficiencies and hidden costs. Sift eliminates this burden with an AI-native platform designed for engineers.

- Escalating Infrastructure Costs – Custom-built observability stacks lead to runaway costs in storage, compute, and maintenance. Sift optimizes cost by decoupling storage and compute, ensuring long-term scalability without exponential overhead.

- Operational Inefficiencies – Legacy monitoring solutions create unnecessary complexity, forcing engineers to spend time managing tools instead of solving real engineering problems. Sift enables teams to focus on accelerating development by automating data review and anomaly detection.

The future of machine engineering depends on a new paradigm—one where observability is built into every stage of the machine lifecycle, not treated as an afterthought.

For more insights on challenges, view the 2024 Aerospace Observability Report

Real-World Impact: The Helium Incident

One aerospace team using an observability platform was able to prevent a mission delay due to an unexpected flight computer malfunction. During fueling, their system detected irregular oscillations in the quartz crystals controlling the flight computer’s clock. The root cause? Helium molecules from the tanks had seeped into the electronics, disrupting the system’s timing.

A traditional monitoring setup—limited to predefined dashboards—would have missed this entirely. Observability surfaced the anomaly in real time, allowing engineers to resolve the issue before it derailed the mission.

This is the power of observability: surfacing the unknown before it becomes a critical failure.

Take Control

The most advanced machines in the world demand equally advanced observability. Sift provides a comprehensive solution to the complexity problem by addressing risk, time, and cost at every stage of the machine lifecycle. By automating data review, anomaly detection, and diagnostics, Sift increases test cadence, captures institutional knowledge, and leverages AI-powered insights to surface hidden dependencies. This accelerates development cycles, reduces manual inefficiencies, and enables teams to collaborate seamlessly across engineering disciplines. At the infrastructure level, Sift optimizes data storage and compute costs, eliminating the need for costly, redundant observability stacks.

If you’re ready to move beyond outdated monitoring and take control of your data, Sift provides the tools to make it happen. With real-time insights, intelligent automation, and scalable infrastructure, our platform ensures that your systems perform flawlessly.