Overview

To ensure rigorous testing of human spaceflight rockets and capsules, industry-leading aerospace firms employ a unified system for telemetry data management. This integrated approach stores and visualizes telemetry from all tests while applying consistent, automated checks across HOOTL (hardware out of the loop), HITL (hardware in the loop), and vehicle data. By streamlining the review process through integrated software tools, you maintain high standards and enable comprehensive analysis across all testing phases.

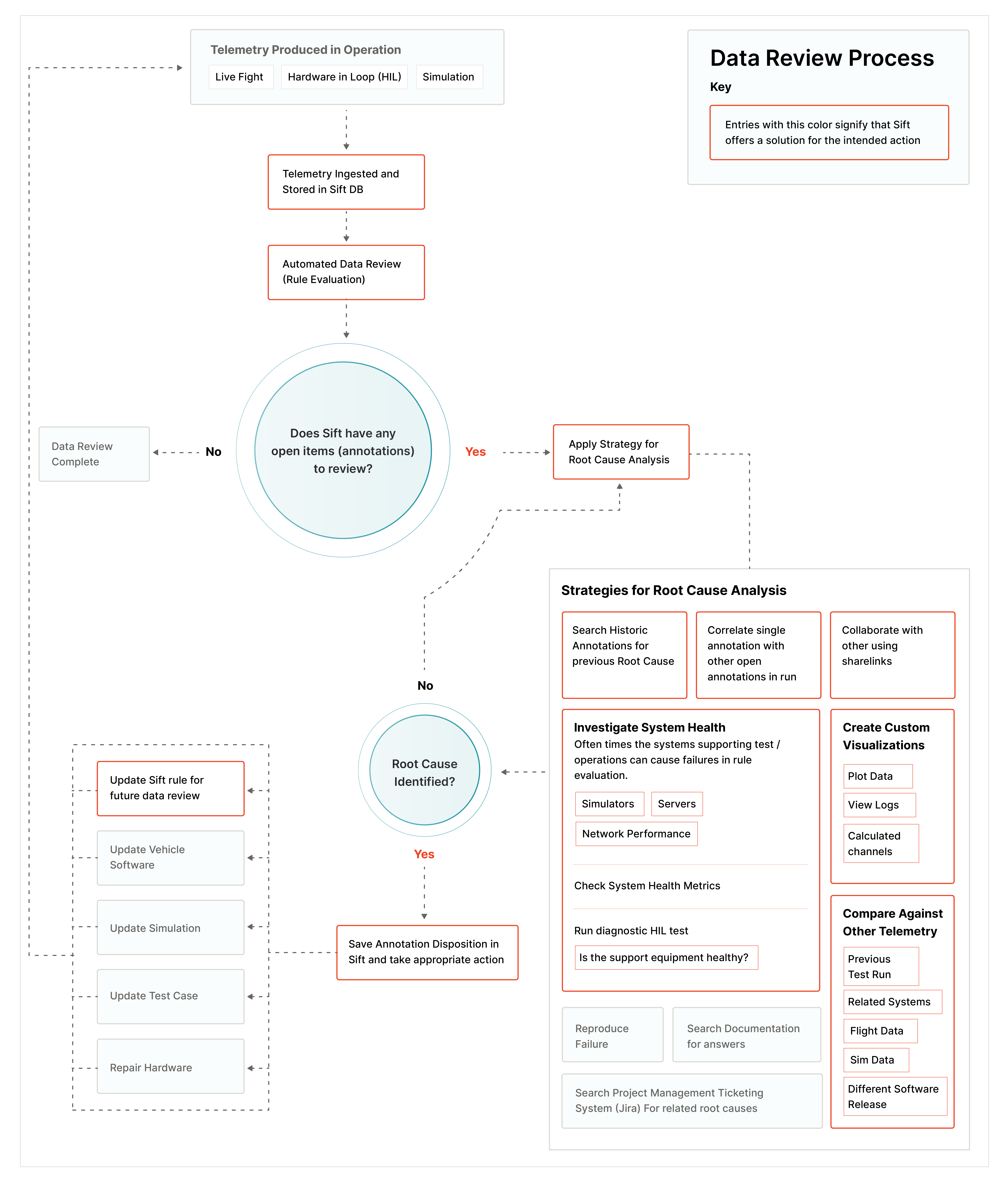

The Data Review Process

The data review process is continuous, occurring for every HOOTL, HITL, or vehicle operation. Thanks to a unified telemetry system and consistent rule application, over 99.99% of automated checks pass. Low-fidelity tests like simulations often “auto-pass” in the Continuous Integration (CI) system without requiring human intervention. In these cases, telemetry flows through Sift for ingestion, storage, and review without a Responsible Engineer (RE) needing to visualize it.
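As a rough illustration of the auto-pass behavior, the sketch below evaluates a set of rules against one run and escalates only the failures. It is a minimal sketch under stated assumptions, not Sift's interface: the `Check` class, the `triage` function, the rule thresholds, and the channel names are all hypothetical, and telemetry is held in memory rather than read from a telemetry store.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical representation of one automated data review rule.
@dataclass
class Check:
    name: str
    predicate: Callable[[Dict[str, List[float]]], bool]  # True means the check passed

def triage(run: Dict[str, List[float]], checks: List[Check]) -> dict:
    """Evaluate every check on one run; auto-pass only if nothing is flagged."""
    failed = [c.name for c in checks if not c.predicate(run)]
    return {
        "status": "auto-pass" if not failed else "needs-review",
        "flagged": failed,  # only these would be escalated to an RE
    }

# Example rules on hypothetical channel names and limits.
checks = [
    Check("tank_pressure_below_limit", lambda r: max(r["tank_pressure_psi"]) < 300.0),
    Check("bus_voltage_in_range", lambda r: all(26.0 <= v <= 32.0 for v in r["bus_voltage_v"])),
]

sim_run = {"tank_pressure_psi": [250.0, 262.5, 271.0], "bus_voltage_v": [28.1, 28.0, 27.9]}
print(triage(sim_run, checks))
```

A run that passes every rule never reaches a person; a run with any flagged check carries the list of failed rule names into review.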

REs typically engage in telemetry exploration only when investigating automatically flagged failures. Root cause analysis is nonlinear; depending on the nature of the failure, it may involve any of the following steps:

- Searching historical annotations for previous root causes

- Correlating the failure with any others that might have occurred at the same time

- Sending a sharelink of the failure to a team member for assistance

- Investigating the performance of the test asset (if applicable) by running a diagnostic test on the Hardware in the Loop (HITL) table or by checking the telemetry channels that correspond to sim performance

- Creating custom visualizations from (1) other relevant raw channels, (2) vehicle, sim, or test runner logs, and (3) derived telemetry channels

- Plotting the relevant data

- Comparing the failure to past telemetry by overlaying historical runs from either (1) telemetry from the same asset, (2) telemetry from the same software release, or (3) telemetry from the same test scenario

- Attempting to reproduce the failure by re-running the test

- Searching the code base and associated documentation

- Searching the ticketing system for previous root causes
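One way to picture the overlay step in the list above is to select past runs that share an asset, software release, or test scenario with the failing run, then measure how far the failing trace departs from each. This is a hypothetical sketch: the `Run` record, its fields, the run IDs, and the assumption that traces are already resampled onto a common time base are illustrative, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical run metadata; real telemetry stores index runs differently.
@dataclass
class Run:
    run_id: str
    asset: str
    release: str
    scenario: str
    samples: List[float]  # one channel, assumed sampled on a common time base

def comparable_runs(failed: Run, history: List[Run], key: str) -> List[Run]:
    """Select past runs sharing `key` ('asset', 'release', or 'scenario') with the failure."""
    return [r for r in history
            if r.run_id != failed.run_id and getattr(r, key) == getattr(failed, key)]

def max_deviation(a: List[float], b: List[float]) -> float:
    """Largest pointwise difference over the overlapping portion of two aligned traces."""
    return max(abs(x - y) for x, y in zip(a, b))

history = [
    Run("r1", "HITL-3", "v2.4", "ascent_nominal", [0.0, 1.0, 2.0, 3.0]),
    Run("r2", "HITL-1", "v2.4", "ascent_nominal", [0.0, 1.1, 2.1, 3.0]),
    Run("r3", "HITL-3", "v2.3", "abort_pad", [0.0, 0.5, 0.9, 1.2]),
]
failed = Run("r9", "HITL-3", "v2.5", "ascent_nominal", [0.0, 1.0, 2.6, 3.9])

for key in ("asset", "release", "scenario"):
    for r in comparable_runs(failed, history, key):
        print(key, r.run_id, round(max_deviation(failed.samples, r.samples), 3))
```

Grouping by each key separately helps separate causes: a deviation that appears against every run of the same scenario but not against older releases points toward a software change rather than a hardware asset.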

Once the root cause of an automatically flagged failure is identified, the RE creates a ticket and implements one of the following fixes:

- Tune the data review rule to account for the newly surfaced edge or corner case,

- Update the vehicle software to fix a bug,

- Update the simulation to increase the fidelity, or

- Change the scenario that the test runner orchestrates.

This ongoing process of investigating auto-flagged failures provides REs with deeper insights into vehicle behavior. As they gain understanding, they refine automatic rules, gradually reducing the need for manual intervention in the review cycle.
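Tuning a rule for a newly surfaced edge case can be as simple as adding a documented exemption. The sketch below assumes a hypothetical `LimitRule` with time-window exemptions; the channel name, limit, and transient are invented for illustration, and real review rules may be tuned in other ways.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class LimitRule:
    """Hypothetical limit rule: flag samples above `limit`, except inside documented windows."""
    channel: str
    limit: float
    # (start_s, end_s, reason) windows added when an RE root-causes a benign edge case
    exemptions: List[Tuple[float, float, str]] = field(default_factory=list)

    def violations(self, times: List[float], values: List[float]) -> List[float]:
        """Return the timestamps of samples that exceed the limit outside any exemption."""
        out = []
        for t, v in zip(times, values):
            exempt = any(start <= t <= end for start, end, _ in self.exemptions)
            if v > self.limit and not exempt:
                out.append(t)
        return out

rule = LimitRule("chamber_temp_k", limit=900.0)
times = [0.0, 0.5, 1.0, 1.5, 2.0]
temps = [300.0, 950.0, 880.0, 870.0, 860.0]  # brief spike at 0.5 s

print(rule.violations(times, temps))  # flagged before tuning

# After root cause: the spike is an expected startup transient, so the rule is tuned.
rule.exemptions.append((0.0, 0.75, "expected ignition transient"))
print(rule.violations(times, temps))
```

Keeping the reason alongside each exemption preserves the RE's root cause with the rule itself, so the next reviewer can see why a sample is no longer flagged.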

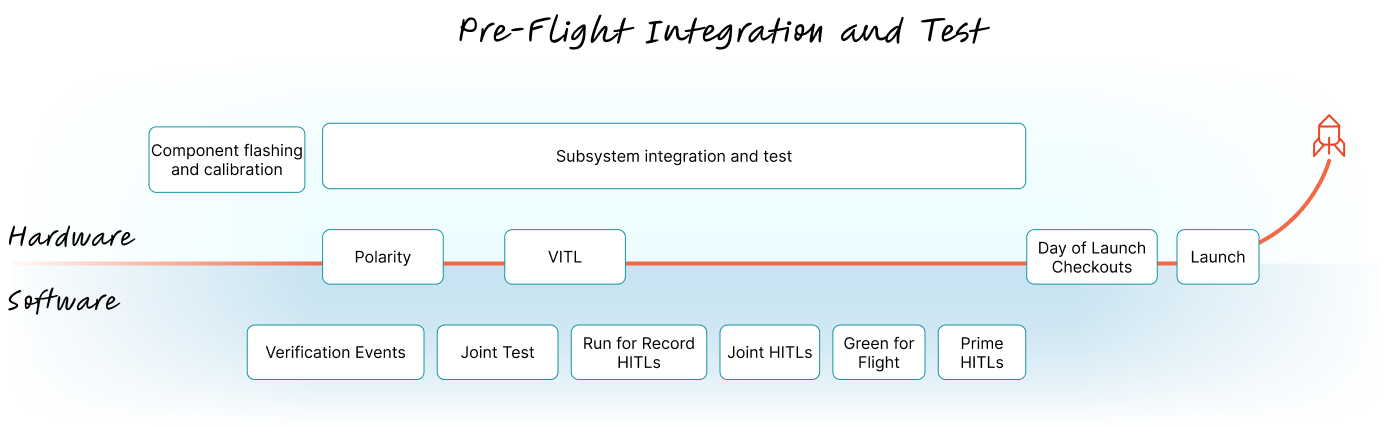

Pre-Flight Test Campaign

Hardware Test and Integration

Component Flashing and Calibration

During the initial build process, each subcomponent is flashed with the most recent firmware version, regardless of its unique manufacturing procedures. While not necessarily the final flight version, this firmware is the most flight-like available, enabling standardized testing.

By integrating telemetry from HOOTL, HITL, and vehicle data, engineers gain deeper insights into vehicle behavior.

Technicians run automated test scenarios using a Python-based test runner. The resulting data feeds into a unified telemetry store for real-time visualization. As tests run, automated checks evaluate the telemetry data, determining specific calibrations for each component.
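A single calibration check of the kind a test runner might execute is sketched below, assuming a sensor held at a known reference value. The acceptance limits `MAX_BIAS` and `MAX_NOISE`, the `calibrate` function, and the serial number are hypothetical; real limits would come from each component's specification.

```python
from statistics import mean, pstdev
from typing import Dict, List

# Hypothetical acceptance limits, in the sensor's own units.
MAX_BIAS = 2.0    # largest allowable offset from the reference
MAX_NOISE = 0.5   # largest allowable standard deviation while held at the reference

def calibrate(serial: str, reference: float, samples: List[float]) -> Dict[str, object]:
    """Derive a bias calibration from samples taken while the sensor sees a known reference."""
    bias = mean(samples) - reference
    noise = pstdev(samples)
    passed = abs(bias) <= MAX_BIAS and noise <= MAX_NOISE
    return {"serial": serial, "bias": round(bias, 4), "noise": round(noise, 4), "passed": passed}

# A transducer held at a 100.0 psi reference reads slightly high.
result = calibrate("PT-0042", 100.0, [101.2, 101.0, 101.4, 101.1, 101.3])
print(result)
```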

Small, allowable differences between subcomponents are recorded and stored in the config repository. These calibrations are crucial for correcting measurement biases, enabling standard checks during later integration and mission operations.
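Recorded calibrations only help if later checks apply them. The sketch below assumes calibrations are stored as JSON keyed by component serial number (a hypothetical format and hypothetical serials) and shows how removing each unit's bias lets two sensors share one standard check.

```python
import json
from typing import Dict, List

# Hypothetical calibration file, as it might be committed to a config repository.
CALIBRATION_JSON = """
{
  "PT-0042": {"bias": 1.2},
  "PT-0043": {"bias": -0.8}
}
"""

def load_calibrations(text: str) -> Dict[str, float]:
    """Map each component serial number to its recorded bias."""
    return {serial: entry["bias"] for serial, entry in json.loads(text).items()}

def correct(serial: str, raw: List[float], cal: Dict[str, float]) -> List[float]:
    """Remove the unit-specific bias so every unit can share one standard check."""
    return [x - cal[serial] for x in raw]

cal = load_calibrations(CALIBRATION_JSON)
# Two units measuring the same 250 psi condition agree once their biases are removed.
a = correct("PT-0042", [251.2], cal)
b = correct("PT-0043", [249.2], cal)
print(a, b)
```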

Subsystem Integration and Test

Once all components are manufactured, flashed, and calibrated, they're integrated into their respective flight vehicles. Subsystem-wide tests then commence, with Polarity being the most fundamental. Polarity verifies proper mapping between software and hardware for Guidance, Navigation, and Control (GNC) sensors and Propulsion system devices. For example, if the Inertial Measurement Unit (IMU) tilts in the -X direction, the software should command engines to fire towards +X to correct it.
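The Polarity example above reduces to a sign check per axis. This sketch follows the text's convention (a tilt toward -X should produce a command toward +X, so the response sign opposes the stimulus sign); the trial data and the `polarity_failures` function are hypothetical.

```python
from typing import List, Tuple

def sign(x: float) -> int:
    """Return -1, 0, or +1."""
    return (x > 0) - (x < 0)

def polarity_failures(trials: List[Tuple[str, float, float]]) -> List[str]:
    """Return the axes whose commanded response does not oppose the applied tilt.

    Each trial is (axis, tilt stimulus, commanded engine response).
    """
    failures = []
    for axis, tilt, command in trials:
        if sign(command) != -sign(tilt):
            failures.append(axis)
    return failures

# Hypothetical stimulus/response pairs recorded during a Polarity test.
trials = [
    ("X", -5.0, +0.8),   # tilt toward -X, engines commanded toward +X: correct
    ("Y", +5.0, -0.7),   # correct
    ("Z", +5.0, +0.6),   # same sign as the stimulus: a possible mapping or sign error
]
print(polarity_failures(trials))
```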

Vehicle data feeds into the same telemetry store, undergoing consistent, automated data review checks. REs investigate any failures, and the vehicle only proceeds to the next test after all issues are resolved.

The continuous data review process supports rigorous testing, from initial hardware checks to final pre-launch operations.

Vehicle in the Loop Test

Vehicle in the Loop Test (VITL) commences after all prerequisite subsystem tests are completed and passed. VITL encompasses a range of tests, from subsystem checkouts to full mission scenarios. Delay tests are a key component: they measure the latency between a flight computer command and the resulting thruster firing. These tests ensure that algorithms on the flight computer and Remote Input/Output (RIO) devices execute critical vehicle commands within specified latency thresholds.
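A delay test can be sketched as pairing each command with its actuation and comparing the gap to a budget. The 20 ms `LATENCY_LIMIT_MS`, the command IDs, and the assumption that command and firing timestamps share a common clock are all hypothetical.

```python
from typing import Dict, List

# Hypothetical command-to-actuation latency budget, in milliseconds.
LATENCY_LIMIT_MS = 20.0

def delay_test(commands: Dict[str, float], firings: Dict[str, float]) -> List[str]:
    """Compare command timestamps with thruster-firing timestamps (both in ms, same clock).

    Flags commands that never fired, fired before they were sent (a timing error),
    or fired later than the budget allows.
    """
    failures = []
    for cmd_id, t_cmd in commands.items():
        t_fire = firings.get(cmd_id)
        if t_fire is None or not (0.0 <= t_fire - t_cmd <= LATENCY_LIMIT_MS):
            failures.append(cmd_id)
    return failures

commands = {"thr-1": 1000.0, "thr-2": 1000.0, "thr-3": 1005.0}
firings = {"thr-1": 1012.5, "thr-2": 1026.0}  # thr-3 never fired
print(delay_test(commands, firings))
```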

All test data is fed into the same telemetry storage for automated review. REs investigate and resolve any failures before proceeding to mission scenarios. These scenarios run through the entire nominal mission and critical contingency situations, verifying that recent software changes haven't introduced regressions.

Throughout VITL, the process remains consistent: all data goes to the same storage, undergoes the same automated checks, and any failures are reviewed by REs.

Day of Launch Checkouts

As the vehicle sits on the launch pad, final automated subsystem checkouts commence. Launch proceeds only after dozens of tests and thousands of associated checks either auto-pass or are root-caused by engineers and given a flight rationale. Operators overseeing the vehicle's final pre-launch phase alert REs upon test completion. All team members, whether in Mission Control or not, participate in reviewing and certifying the vehicle for flight. The automated review system handles most of the data analysis, escalating only failures to the engineers preparing the vehicle for launch.
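The launch criterion above can be expressed as a gate over check results. In this hypothetical sketch, a failed check stops blocking launch only once it carries both a root cause and a flight rationale; the `CheckResult` fields and check names are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class CheckResult:
    """Hypothetical record of one automated check from a day-of-launch checkout."""
    name: str
    passed: bool
    root_cause: Optional[str] = None
    flight_rationale: Optional[str] = None

def blocking_checks(results: List[CheckResult]) -> List[str]:
    """A failed check blocks launch until it has both a root cause and a flight rationale."""
    return [r.name for r in results
            if not r.passed and not (r.root_cause and r.flight_rationale)]

def go_for_launch(results: List[CheckResult]) -> bool:
    return not blocking_checks(results)

results = [
    CheckResult("gnc_imu_bias", passed=True),
    CheckResult("prop_valve_timing", passed=False,
                root_cause="pad sensor sampling artifact",
                flight_rationale="flight sensors unaffected; margin verified"),
    CheckResult("power_bus_ripple", passed=False),
]
print(blocking_checks(results), go_for_launch(results))
```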

Software Test

Polarity

Prior to the vehicle Polarity event, flight software undergoes Polarity dry run tests on HITL testbeds. These tests replicate the exact procedures planned for the vehicle in a fully simulated environment. The resulting data feeds into the standard telemetry store and undergoes the usual automated checks. Any testbed failures prevent the vehicle Polarity test from proceeding. Only after all automatically flagged failures are investigated and deemed acceptable is a Polarity software release created for hardware integration teams.

Verification Events

Separate from hardware workflows, flight software must be certified with the regulatory agency for every new mission-related change. A meticulous process identifies which tickets require formal certification. Safety-critical changes are implemented first, followed by Verification Event (VE) testing.

VE testing focuses on thorough regression testing of the "must work" and "must not work" functions affected by each software change. This involves running mission or failure scenario simulations and HITLs to exercise each required function. All test data is sent to the standard telemetry store and undergoes the same automated review process, ensuring consistency across all testing phases.
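VE coverage can be tracked by confirming that every affected function has been exercised by at least one passing scenario. The function names, scenario names, and data layout below are hypothetical; for a "must not work" function, "exercised" is taken to mean a scenario verified that the function stayed inhibited.

```python
from typing import Dict, List, Set

# Hypothetical inputs: functions affected by certified changes, labelled by requirement,
# and the functions each passing scenario exercised.
affected = {
    "abort_on_engine_out": "must work",
    "inadvertent_separation": "must not work",
    "hatch_pressure_equalize": "must work",
}
exercised_by_passing_runs: Dict[str, Set[str]] = {
    "sim_ascent_engine_out": {"abort_on_engine_out"},
    "hitl_ascent_nominal": {"inadvertent_separation"},
}

def uncovered(affected: Dict[str, str], runs: Dict[str, Set[str]]) -> List[str]:
    """List affected functions that no passing scenario has exercised yet."""
    covered = set().union(*runs.values())
    return sorted(f for f in affected if f not in covered)

print(uncovered(affected, exercised_by_passing_runs))
```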

A unified approach to telemetry ensures consistent rule application and comprehensive analysis across all testing stages.

Joint Test

Joint Tests, a subset of Verification Events, focus on vehicle communication systems. These tests simulate interactions between the company’s vehicle and third-party spacecraft. Prior to actual Joint Tests, SpaceX conducts dry runs on HITL testbeds with simulated third-party assets. The software release for Joint Test is created only after all automatic checks pass or are properly dispositioned.

Joint Tests evaluate every nominal communication path between SpaceX and third-party vehicles, as well as responses to common failure scenarios. Strict test procedures govern the evaluation of each communication path and failure response. As with all other tests, SpaceX data from Joint Tests feeds into the standard telemetry store and undergoes the established automated review process.

VITL

VITL (Vehicle in the Loop Test) serves as the final deliberate milestone between hardware integration and flight software teams before the final software release. Its primary goal for software teams is to establish parity between HITL testbeds and the actual vehicle, ensuring subsequent HITL tests accurately represent flight conditions.
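Parity between HITL testbeds and the vehicle could be quantified per channel, for example as the root-mean-square difference between the same procedure run on both. The channel names and tolerances in `PARITY_TOLERANCE` are hypothetical, and the traces are assumed to be aligned and equally sampled.

```python
import math
from typing import Dict, List

# Hypothetical per-channel tolerance on RMS difference, in each channel's own units.
PARITY_TOLERANCE = {"rcs_valve_current_a": 0.05, "fc_cycle_time_ms": 0.2}

def rms_difference(a: List[float], b: List[float]) -> float:
    """Root-mean-square difference between two equally sampled traces."""
    if len(a) != len(b) or not a:
        raise ValueError("traces must be non-empty and the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def parity_gaps(vehicle: Dict[str, List[float]], hitl: Dict[str, List[float]]) -> List[str]:
    """Channels where the HITL testbed does not reproduce the vehicle within tolerance."""
    return [ch for ch, tol in PARITY_TOLERANCE.items()
            if rms_difference(vehicle[ch], hitl[ch]) > tol]

vehicle = {"rcs_valve_current_a": [0.00, 1.20, 1.21, 0.00], "fc_cycle_time_ms": [10.0, 10.1, 10.0, 10.1]}
hitl = {"rcs_valve_current_a": [0.00, 1.18, 1.22, 0.01], "fc_cycle_time_ms": [10.0, 10.6, 10.5, 10.6]}
print(parity_gaps(vehicle, hitl))
```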

Preparation involves VITL dry runs on HITL testbeds, modified to simulate vehicle operating restrictions in the clean room (e.g., no RF signal emissions, no firing engines). Subsystem REs scrutinize flagged failures from the automatic review tool, using telemetry storage and visualization tools to investigate any concerning behavior. VITL proceeds only after all REs approve the dry runs.
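Because the dry runs must mirror clean-room restrictions, one simple automated check is to scan the testbed's command log for anything the restrictions prohibit. The command names and the `PROHIBITED` set are hypothetical stand-ins for whatever inhibits a real procedure defines.

```python
from typing import List, Tuple

# Hypothetical clean-room restrictions mirrored on the HITL testbed for the dry run.
PROHIBITED = {"rf_transmit_enable", "engine_fire"}

def restriction_violations(command_log: List[Tuple[float, str]]) -> List[Tuple[float, str]]:
    """Return (time_s, command) entries that would violate clean-room restrictions."""
    return [(t, cmd) for t, cmd in command_log if cmd in PROHIBITED]

log = [
    (0.0, "power_on_avionics"),
    (4.2, "valve_cycle_check"),
    (9.8, "rf_transmit_enable"),  # should have been inhibited for VITL
]
print(restriction_violations(log))
```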

Standalone Run for Records

Standalone Run for Record (R4R) tests commence after all vehicle configuration parameters are double-verified by relevant REs. These tests encompass full nominal mission scenarios and the most critical failure scenarios. R4Rs use verified configurations that closely resemble the flight software load, and they serve as formal deliverables to regulatory bodies.
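The double-verification prerequisite for R4Rs can be checked mechanically by requiring sign-off from at least two distinct REs per parameter. Parameter names and reviewer IDs below are hypothetical.

```python
from typing import Dict, List, Set

def not_double_verified(signoffs: Dict[str, Set[str]], required: int = 2) -> List[str]:
    """Parameters lacking sign-off from at least `required` distinct REs.

    Reviewers are stored as a set, so the same RE signing twice counts once.
    """
    return sorted(p for p, reviewers in signoffs.items() if len(reviewers) < required)

# Hypothetical parameter names and reviewer IDs.
signoffs = {
    "gnc.imu_alignment": {"re_alice", "re_bob"},
    "prop.valve_open_delay_ms": {"re_carol"},
    "power.bus_undervoltage_v": {"re_dave", "re_erin"},
}
print(not_double_verified(signoffs))
```

An empty result would mean every parameter has two independent sign-offs and the R4R can start.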

While Verification Events (VEs) focus on specific safety-critical changes, R4Rs provide holistic proof of the vehicle software's flight readiness. Consistent with previous stages, all telemetry data undergoes the same storage and review process, with REs investigating any automatically flagged failures.

Joint Run for Records

Joint Run for Records (R4Rs) parallel Standalone R4Rs but involve multiple vehicle HITL testbeds running the most flight-like software. These tests explore nominal and failure scenarios for multiple company vehicles operating simultaneously. Unlike previous tests using vehicle simulations, Joint HITLs physically connect testbeds to verify simulation accuracy. These Joint R4R tests also differ from those in the Joint Test milestone because they include only the company’s own flight hardware testbeds. Responsible Engineers (REs) from all vehicle groups investigate failures identified through the standard telemetry store and automated review process.

Green for Flight

Green for Flight (GFF) tests represent the most comprehensive suite of mission scenario tests for the software. Hundreds of tests, categorized by subsystem, mission phase, or stress scenarios, run in simulated or HITL environments based on required fidelity. These tests exhaust every conceivable failure scenario for each subsystem across all mission phases.
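Selecting an environment by required fidelity might look like the following. As a simplifying assumption, fidelity is reduced to a single `needs_hardware` flag; the `GffTest` descriptor, categories, and test names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class GffTest:
    """Hypothetical GFF test descriptor."""
    name: str
    category: str          # e.g. subsystem, mission phase, or stress
    needs_hardware: bool   # True if flight-computer timing or I/O must be exercised

def schedule(tests: List[GffTest]) -> Dict[str, Dict[str, List[str]]]:
    """Group tests by environment (sim vs HITL), then by category."""
    plan: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for t in tests:
        env = "hitl" if t.needs_hardware else "sim"
        plan[env][t.category].append(t.name)
    return {env: dict(cats) for env, cats in plan.items()}

tests = [
    GffTest("engine_out_at_maxq", "mission_phase:ascent", needs_hardware=False),
    GffTest("fc_failover_during_burn", "subsystem:avionics", needs_hardware=True),
    GffTest("random_dispersion_sweep", "stress", needs_hardware=False),
]
print(schedule(tests))
```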

Flight software teams run this full battery of tests and review all GFF results to inform their own engineering judgment; they share the results with regulators as a matter of openness rather than contractual requirement. Like R4Rs, GFF tests proceed only after all configurations are approved by subsystem REs. Data from these tests undergoes the standard telemetry storage and review process.

“Prime” HITLs

By the time R4Rs and GFF tests are completed, most configurations have been reviewed, but some "Day of Launch" (DOL) configs remain to be updated. These include final vehicle mass properties and trajectory parameters. Once all DOL configs are committed, a final round of subsystem checkouts and "Prime" HITLs are conducted.

"Prime" HITLs test all nominal mission scenarios using the exact launch software. After the automated review tool's identified failures are addressed by relevant REs, the flight software RE creates the final software release for launch.
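Because Prime HITLs must run the exact launch software, a release process can compare a digest of what was tested with a digest of what is about to be released. This sketch hashes a hypothetical software image together with its DOL configs using SHA-256 from Python's `hashlib`; artifact names and contents are invented for illustration.

```python
import hashlib
from typing import Dict

def digest(artifacts: Dict[str, bytes]) -> str:
    """Order-independent SHA-256 over named artifacts (software image plus DOL configs)."""
    h = hashlib.sha256()
    for name in sorted(artifacts):
        name_bytes = name.encode()
        # Length-prefix each field so different splits of the same bytes hash differently.
        h.update(len(name_bytes).to_bytes(8, "big"))
        h.update(name_bytes)
        h.update(len(artifacts[name]).to_bytes(8, "big"))
        h.update(artifacts[name])
    return h.hexdigest()

# Hypothetical artifacts; a real release system would read the actual image and configs.
tested = {"fsw.img": b"fsw-image-bytes", "dol/mass_properties.json": b'{"mass_kg": 12055}'}
release = dict(tested)

print(digest(tested) == digest(release))   # identical load: release may proceed
release["dol/mass_properties.json"] = b'{"mass_kg": 12060}'
print(digest(tested) == digest(release))   # a late config change invalidates the Prime HITL
```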
